Octave [1] is a great platform for machine learning tasks. Its run-time performance can be improved by using OpenBLAS [2] and by increasing the number of threads Octave can use. It can be improved further with NVIDIA's cuBLAS [3]. The following steps work on Ubuntu.


For this test, the matrix multiplication Octave code from [3] was used. To use OpenBLAS and increase the number of threads, run the Octave code from a terminal as follows:

- export LD_LIBRARY_PATH=/opt/OpenBLAS

- OMP_NUM_THREADS=<NumThreads> LD_PRELOAD=/opt/OpenBLAS/lib/libopenblas.so octave code.m
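To compare several thread counts, the command above can be generated in a loop. The following is a minimal sketch, not part of the original test setup: the helper name `octave_cmd` and the thread counts 1, 2, 4 and 8 are assumptions chosen for illustration, while the library path and script name are the ones used above. It only prints the command lines, so nothing is executed until you choose to run them.

```shell
#!/bin/sh
# Library path and script name as used in this post.
OPENBLAS_LIB=/opt/OpenBLAS/lib/libopenblas.so
SCRIPT=code.m

# Hypothetical helper: print the Octave command line for a given thread count.
# $1 = number of threads
octave_cmd() {
    printf 'OMP_NUM_THREADS=%s LD_PRELOAD=%s octave %s\n' "$1" "$OPENBLAS_LIB" "$SCRIPT"
}

# Print one command per thread count (the counts are illustrative).
for n in 1 2 4 8; do
    octave_cmd "$n"
done
```

Each printed line can be pasted into a terminal, optionally prefixed with `time`, to measure one configuration at a time.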

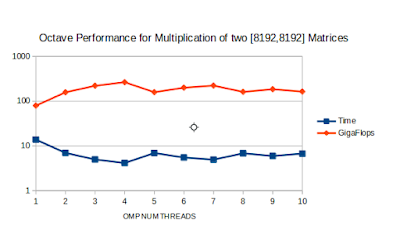

Results

Figure: Matrix multiplication without OpenBLAS. Note: the y-axis is in log scale.

Figure: Improved performance using OpenBLAS. Note: the y-axis is in log scale.

As expected, performance is best when OMP_NUM_THREADS is set to the number of cores on the machine.
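One way to pick that value automatically is sketched below, assuming GNU coreutils' `nproc` is available (it is on a standard Ubuntu install). Note that `nproc` reports the processing units available to the current process, which on machines with hyper-threading can be higher than the number of physical cores, so treat the result as a starting point rather than a guaranteed optimum.

```shell
#!/bin/sh
# Ask the system how many processing units are available.
cores="$(nproc)"

# Use that count as the thread limit for the Octave run.
export OMP_NUM_THREADS="$cores"
echo "OMP_NUM_THREADS=$OMP_NUM_THREADS"

# Then start Octave as before, for example:
# LD_PRELOAD=/opt/OpenBLAS/lib/libopenblas.so octave code.m
```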

References

[1] GNU Octave: https://www.gnu.org/software/octave/

[2] OpenBLAS: http://www.openblas.net/

[3] Drop-in Acceleration of GNU Octave: https://devblogs.nvidia.com/parallelforall/drop-in-acceleration-gnu-octave/
